AI has formally existed since the 1950s, when it was described as the ability of a machine to perform a task that would otherwise require human intelligence. In recent years, organizations have deployed AI in their operations and technology products for purposes such as fraud detection, malware detection, improved lending decisions, better business decision-making, self-driving cars and chatbots. SixThirty has already invested in a number of portfolio companies that have integrated AI into their products.

AI is having its web browser moment

David Fairman, Venture Partner and CISO-in-Residence at SixThirty, who has held leadership positions at NAB, Royal Bank of Canada, JP Morgan Chase and Royal Bank of Scotland, explains: “Since late 2022, the world has seen a transformation in how AI can be accessed by the masses, with a very low barrier to entry. Before then, organizations needed highly specialized skill sets and a large amount of training data to use AI. Generative AI (GAI) has removed those barriers, and with services like ChatGPT, Google Bard and the new Bing Chat, the accessibility of this technology to the masses is proving to be transformational for businesses.” But will this technology live up to the hype? Take blockchain, for example: it has struggled to realize the full benefits it promised.

No surprise, then, that the announcement of the European Union’s AI Act has set alarm bells ringing globally. We asked Dr. Sindhu Joseph, founder & CEO of CogniCor, about the key takeaways from the Act for regulating AI. (CogniCor is a Palo Alto, California-based startup that develops AI-powered customer assistants and business automation technology for financial advisors, e.g. the RIA affiliates of Charles Schwab.)

Sindhu highlighted the following:

- The AI Act adopts a risk-based approach, restricting AI applications based on their predicted danger, and requires businesses to submit risk assessments for their AI use.

- It prohibits emotion-recognition AI, i.e. the use of AI to recognize emotions, in policing, schools and workplaces. While some argue that emotion-recognition AI can identify student comprehension or driver fatigue, the ban aims to address concerns about accuracy and bias.

- It also bans real-time biometric identification and predictive policing in public spaces, although the enforcement details remain to be worked out among EU bodies, as policing groups argue that these technologies are necessary.

EU AI Law is perhaps too early, and unfair to smaller firms

Sindhu felt it was a bit early to roll out an AI Law, and cited the following concerns:

- It could put potential buyers of AI-driven enterprise SaaS on high alert and cause them to postpone adoption. This would put smaller but innovative providers at a disadvantage, as buyers would trust larger tech firms to build all of these regulatory requirements into their AI offerings.

- The AI Law is being rolled out, but there are no platforms or services yet to assess or measure these factors. Detecting sentiment recognition and emotional bias in responses is one example: how do you check whether an AI-embedded service has emotion recognition built into its algorithms?

- The biggest threat is that entire classes of (potentially useful) applications are eliminated from the realm of possibilities. For example, an AI-driven therapist, which must recognize and respond to emotions from the outset, could never become a reality in Europe. Because of these restrictions, some areas will not attract enough funding for AI applications to become well-rounded, as VC firms and health innovators shy away from the perceived corporate risk.

- Finally, the law could skew the “AI world order” as countries like China go all out on AI, including biometric identification.

On the other hand, she is upbeat on some aspects of the law:

- More than once in the recent past, Europe has taken the lead on equity and societal happiness, and has demonstrated its willingness to forgo potential profit for the well-being of its people. If other countries follow this example, we may find a way to coexist with AI without bringing much harm upon ourselves.

- At a micro level, whether the law is good or bad, it brings clarity to stakeholders, allowing the private sector to direct investment toward what is viable from the AI law standpoint.

- The law will trigger more academic research into solving some of the concerns that prompted it in the first place, resulting in frameworks for the development of safer systems.

In her understanding, the law does not address who is responsible in certain circumstances. In autonomous driving, for example, the decision-maker may be an AI, but it is unclear who would bear responsibility in the event of an accident. Accountability with respect to AI is a topic that needs to be addressed as we move toward a Responsible AI mindset.

Large enterprises will look to batten down the hatches first

According to Samarth Shekhar, Regional Manager EMEA at SixThirty, who has evaluated and invested in startups in the Responsible AI and Generative AI space: “The outcry against the EU AI Law is understandable, but the law is perhaps the right antidote to a potential Wild West of AI, where black-box AI models unleash everything from bias, unfairness, data poisoning and leakage all the way to potentially existential threats.”

For high-risk systems the stakes will be high, as the EU AI Act stipulates the following:

- They must have human oversight to minimize risks, and show that human discretion is part of the AI system’s deployment.

- They will be subject to transparency requirements, and will be required to manage biases effectively.

- They have to maintain thorough documentation (e.g. records of programming and training methodologies, data sets used, and measures taken for oversight and control) to demonstrate regulatory compliance.

- Non-compliance can lead to sanctions and substantial fines, ranging from €35 million or 7% of global turnover to €7.5 million or 1.5% of turnover, depending on the infringement and company size.
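To show how a “fixed amount or percentage of turnover” cap scales with company size, here is a minimal Python sketch using the two tiers quoted above. It assumes, as we understand the Act’s penalty provisions, that the higher of the two amounts applies to most companies and the lower of the two to SMEs and start-ups; the helper `max_fine_eur` and the €2 billion turnover figure are hypothetical, and the final legal text should be checked before relying on any number.

```python
# Minimal sketch: upper bound of a fine under a
# "fixed amount or % of global turnover" rule.
# Assumption: the HIGHER of the two applies to most companies,
# the LOWER of the two to SMEs and start-ups. Illustrative only, not legal advice.

# Tiers quoted in the article: (fixed cap in EUR, % of global turnover)
MOST_SEVERE = (35_000_000, 7.0)
LEAST_SEVERE = (7_500_000, 1.5)


def max_fine_eur(tier: tuple[float, float], global_turnover_eur: float, is_sme: bool = False) -> float:
    """Return the maximum fine for one infringement tier (hypothetical helper)."""
    fixed_cap_eur, turnover_pct = tier
    turnover_based_eur = turnover_pct / 100 * global_turnover_eur
    if is_sme:
        # Assumed SME rule: whichever amount is lower
        return min(fixed_cap_eur, turnover_based_eur)
    # Assumed default rule: whichever amount is higher
    return max(fixed_cap_eur, turnover_based_eur)


if __name__ == "__main__":
    # Hypothetical company with EUR 2 billion global turnover
    for name, tier in [("most severe", MOST_SEVERE), ("least severe", LEAST_SEVERE)]:
        print(f"{name}: EUR {max_fine_eur(tier, 2_000_000_000):,.0f}")
```

For this hypothetical company the turnover-based amount dominates in both tiers, so the script prints EUR 140,000,000 and EUR 30,000,000; for a company with €100 million in turnover, the €35 million fixed amount would be the binding cap in the most severe tier.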

He foresees the most relevant AI innovations in financial services being dominated by specific, hyperlocal AI models that are trained on specialized data (think insurance claims, financial fraud, etc.) and that show up in the application layer: the interfaces and workflow tools that make AI models accessible to business customers or consumers. “Expect to see more of the industry-specific, vertical SaaS and ‘every company will be a FinTech company’ trend. But while low-risk, repetitive jobs get automated, expect plenty of AI co-pilot and ‘human in the loop’ applications in regulated sectors like finance and healthcare.”

AI Co-pilots Taking Off?

Financial institutions are already putting Generative AI to the test:

- Financial advisors can use co-pilots to be more productive: analyzing customer data to offer tailored financial planning or investment suggestions, or expanding (profitably) to previously unserved customers.

- Lending teams can use co-pilots to gather customer data and guide customers through completing application forms, but also to power credit-scoring models that can ingest and process a wider range of data points.

- Investment analysts are testing co-pilots to analyze event transcripts, earnings calls, company filings, macroeconomic reports, regulatory filings, etc. to put together capital markets research or pitchbooks.

- Insurers see immense potential in Generative AI to summarize and synthesize content from policies, documents or other unstructured content, as well as to answer questions or create new content based on the analysis.

- Generative AI-powered chatbots can enhance customer support by taking over routine queries and tasks, bringing in humans as needed, and supporting customers with product discovery, recommendations and sales.

One thing is clear, Samarth adds: “Large enterprises in finance or healthcare will look to batten down the hatches first, before rolling out AI to clients or anywhere beyond the back office. We are as excited about the ‘boring’ pieces, keeping regulated enterprises and their customers safe, as we are about the innovations that AI will bring to these sectors, and we are about to announce our first investment in this space.”

Boring is Big

Financial institutions’ concerns about Generative AI range from data privacy and security to data poisoning, reverse engineering, deepfakes and increasing AI regulation. We are seeing existing information security players expand to cover Generative AI risks, as well as “native” startups offering Generative AI security or firewalls.

However, despite all possible measures, there remains a residual risk of things going awry, and as AI governance and regulations are formalized, enterprises could face fines, litigation or sanctions.

Finally, financial institutions don’t want their proprietary data used to train someone else’s language model. We expect to see more enterprise-grade offerings tailored to financial services that can bring home the rewards while reducing the risks.

A Responsible AI Checklist for Enterprises?

David believes the EU AI Act is a first step toward formalizing Responsible AI: a set of practices that ensures AI systems are designed, deployed and used in an ethical and legal way, at scale. When companies implement Responsible AI, they minimize the potential for artificial intelligence to cause harm and make sure it benefits individuals, communities and society. With the explosion in AI use and its widespread accessibility, Responsible AI has gained significant impetus. It was an important topic before Generative AI, but the transformation Generative AI is driving makes it more important than ever.

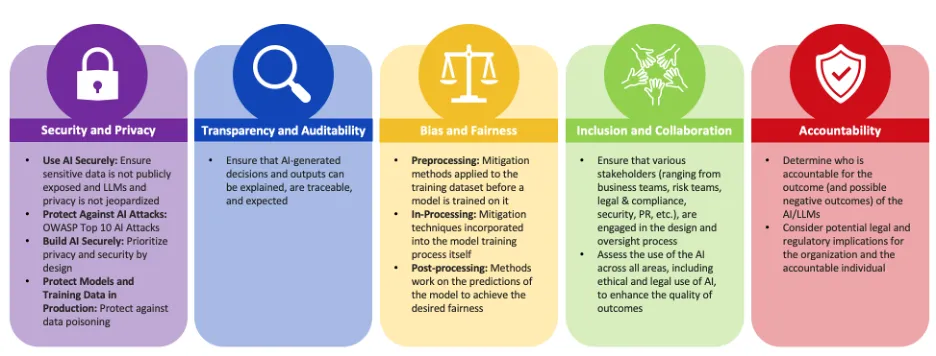

David suggests that the key pillars of Responsible AI are as follows:

Some of the questions David is encouraging regulated enterprises to ask themselves:

- How is your organization testing (assuring) for model robustness?

- How are you protecting the model from adversarial attacks where the existing cybersecurity control stack leaves gaps?

- How is your organization testing (assuring) for model explainability?

- Are you doing any of the above in real time, or are these periodic assessments?

- How is your organization detecting bias in the model?

- How is your organization validating the integrity of the data and protecting from data poisoning?

- How is your organization insuring itself against potential fines or lawsuits if, despite all measures, AI models fall short of perfect?
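Bias detection can take many forms. One of the simplest group-fairness checks is demographic parity, which compares the rate of favorable outcomes (e.g. loan approvals) across groups. The Python sketch below is illustrative only: the approval data, the group labels and the 0.1 review threshold are hypothetical, and demographic parity is just one metric among several, not one prescribed by the EU AI Act.

```python
# Minimal sketch of one group-fairness check (demographic parity).
# The data, group labels and threshold below are hypothetical.
from collections import defaultdict


def selection_rates(predictions: list[int], groups: list[str]) -> dict[str, float]:
    """Share of favorable (1) predictions within each group."""
    if not predictions or len(predictions) != len(groups):
        raise ValueError("predictions and groups must be non-empty and the same length")
    totals: dict[str, int] = defaultdict(int)
    favorable: dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        favorable[group] += 1 if pred == 1 else 0
    return {g: favorable[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions: list[int], groups: list[str]) -> float:
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


REVIEW_THRESHOLD = 0.1  # hypothetical tolerance; would be set per use case and policy

if __name__ == "__main__":
    # Hypothetical loan-approval decisions (1 = approved) for two applicant groups
    approvals = [1, 1, 0, 1, 1, 0, 0, 0]
    applicant_group = ["A", "A", "A", "A", "B", "B", "B", "B"]
    gap = demographic_parity_difference(approvals, applicant_group)
    print(selection_rates(approvals, applicant_group), gap)
    if gap > REVIEW_THRESHOLD:
        print("Flag model for bias review")
```

Here group A is approved 75% of the time and group B 25%, a gap of 0.5, so the model would be flagged. Depending on the use case, metrics that condition on the true outcome (such as equalized odds) may be more appropriate, and running such checks continuously rather than periodically speaks directly to the real-time question above.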

Organizations that embark on the Responsible AI journey sooner rather than later will be able to adapt more quickly and respond to evolving regulation. We know that compliance can be expensive and burdensome for organizations, so getting on the front foot could help keep compliance costs down and, more importantly, drive a race to the top on AI safety, which will undoubtedly be a key differentiator in the market.

“Finally, AI protection is always going to be playing catch-up with AI threats and regulations,” Samarth adds. “Organizations should take note of players like Armilla Assurance (one of our recent investments), which has stood up insurance and warranty products for enterprises as well as third-party vendors, for when, despite all efforts, AI models go awry.”

Dustin Wilcox, Venture Partner and CISO-in-Residence at SixThirty, wraps it up well: “It will be fascinating to see how this translates into final rules, including the explicit technical controls and reporting requirements needed to comply. Those are likely to have a major influence on the pace and direction of innovation in this space. Expect California to fast-follow on this path.”